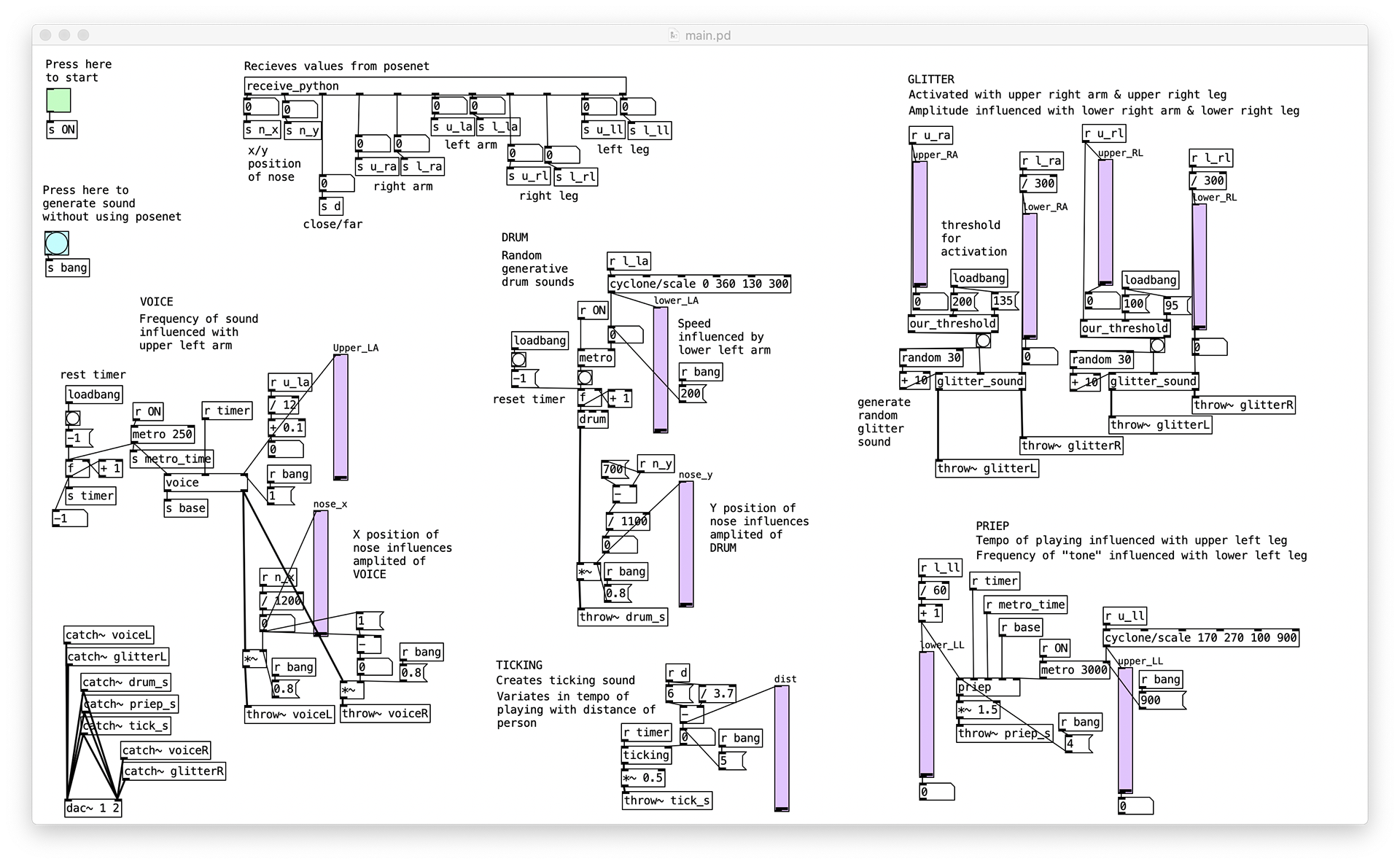

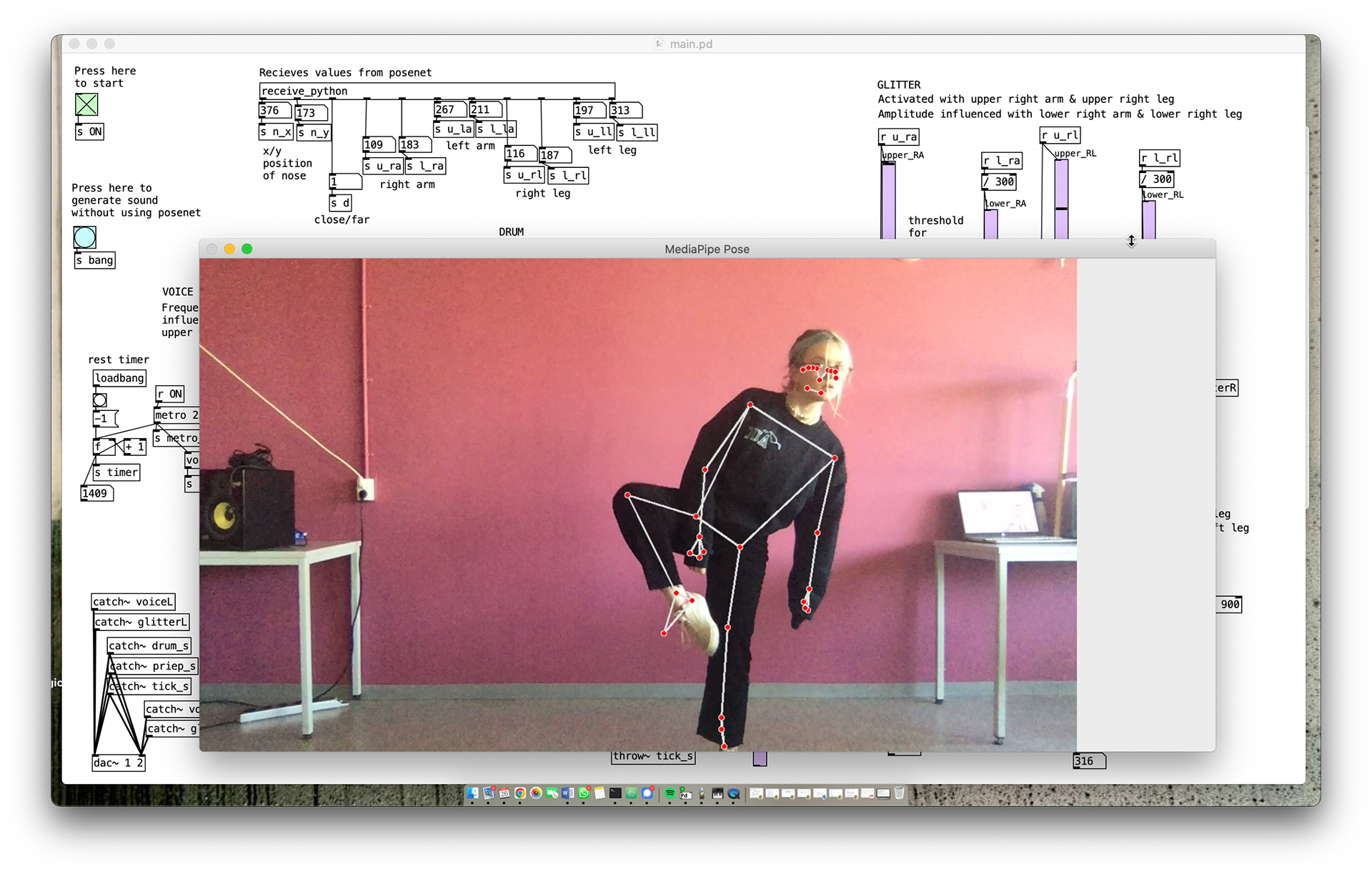

An interactive sound experience that generates sound based on the user’s position in space and the movements and angles of their arms and legs.

The project uses PureData and MediaPipe’s PoseNet to turn the body into an instrument. Every change in limb angle and proximity influences the music being generated.
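As a rough illustration of the idea, the sketch below computes the angle at a joint from three 2D pose keypoints and maps it to a pitch. It is not taken from the project's repository: the function names `joint_angle` and `angle_to_frequency`, the keypoint coordinates, and the 220 to 880 Hz range are all assumptions chosen for the example.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, formed by the segments b-a and b-c.

    Each point is an (x, y) tuple, e.g. shoulder, elbow, wrist keypoints.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        # Degenerate case: two keypoints coincide, so no angle is defined.
        return 0.0
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def angle_to_frequency(angle_deg, low_hz=220.0, high_hz=880.0):
    """Map an angle in [0, 180] degrees to a frequency on an exponential scale.

    The frequency range is a hypothetical choice, not the project's mapping.
    """
    t = max(0.0, min(1.0, angle_deg / 180.0))
    return low_hz * (high_hz / low_hz) ** t


# Hypothetical normalized keypoints for shoulder, elbow, and wrist.
shoulder, elbow, wrist = (0.5, 0.3), (0.5, 0.5), (0.7, 0.5)
angle = joint_angle(shoulder, elbow, wrist)
print(angle, angle_to_frequency(angle))
```

The exponential mapping is used here because pitch perception is roughly logarithmic in frequency, so equal changes in angle give equal musical intervals. The resulting value could then be passed to a PureData patch, for instance over a network connection.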

Link to GitHub: https://github.com/zoebreed/Sound_Space_Interaction

Keywords: Interactive Sound Installation, PureData, PoseNet

In collaboration with Joyce den Hertog